Type-Driven Development in Rust (Rust 2021 Edition) – A Comprehensive Guide

Executive Summary

Type-Driven Development (TDD) is an approach where types are the foundation of program design. Instead of writing tests first (as in test-driven development), developers specify rich type constraints and let the compiler enforce program correctness. In Rust 2021, TDD means leveraging Rust’s powerful type system (ownership, lifetimes, traits, etc.) to ensure reliability and safety at compile time. This guide provides a practical and comprehensive roadmap to TDD in Rust, grounded in academic research and industrial best practices. We cover historical and theoretical foundations (e.g. dependent, refinement, linear, and session types), core Rust type system features (ownership, traits, generics, algebraic data types, etc.), and compare Rust’s approach with other languages (Haskell, OCaml, Scala, TypeScript, Idris, Agda). We also survey empirical evidence (like defect reduction and productivity gains), discuss tools (Rust Analyzer, Clippy, property-based testing, formal verification), and explore applications in systems programming, embedded, WebAssembly, blockchain, concurrency, and security. Practical sections include getting started tips, Rust design patterns for type safety, refactoring techniques to make “illegal states unrepresentable,” best practices for API design, and future directions in type systems. A glossary of terms and references to key papers (POPL, PLDI, ICFP, OOPSLA, etc.) are provided. In summary, this guide shows how Rust’s type-driven development can catch bugs early, yield robust code, and give developers confidence that “if it compiles, it works,” all while maintaining zero-cost abstractions.

Historical Background

Modern type-driven development has deep roots in the history of programming languages. Type systems emerged as a formal way to classify and constrain program behavior, preventing certain classes of errors at compile time. In the 1970s, languages like ML (MetaLanguage) introduced Hindley-Milner type inference, proving that static types could be both expressive and convenient. Robin Milner’s work on ML and the polymorphic lambda calculus laid the groundwork for treating types as a fundamental tool for program verification. Over the following decades, academic research increasingly viewed types as specifications: under the Curry-Howard correspondence, a well-typed program can be seen as a proof that certain errors (e.g. type mismatches) are impossible at runtime. This idea evolved into richer type disciplines capable of expressing more program properties.

By the 1990s and 2000s, researchers began pushing types beyond simple classification of integers vs. strings. Dependent types (types that depend on values) gained traction in proof assistants and experimental languages, allowing compilers to verify properties like array bounds or sortedness of lists. At the same time, refinement types were proposed to attach logical predicates to types (for example, a type of “non-zero integers” that excludes zero), enabling more lightweight verification integrated with programming (related concepts/projects, e.g., Liquid Types). The goal was to catch even logical errors at compile time without full theorem-proving. Languages and systems like Dependent ML, ESC/Java, and later Liquid Types for C and Haskell explored this space.

In parallel, industry languages were grappling with reliability issues caused by untyped or weakly-typed code. The famous “billion-dollar mistake” (Tony Hoare’s term for null pointers) underscored how the absence of types to rule out invalid states (like null dereferences) led to countless runtime errors. The mantra “make illegal states unrepresentable” gained popularity: if the type system forbids a program state that would be invalid, then the program can’t even compile with that bug. Functional programming communities using ML, Haskell, and OCaml demonstrated how algebraic data types and pattern matching could encode complex invariants, essentially eliminating entire categories of bugs (e.g. forgetting a case in a state machine). Companies like Jane Street capitalized on this by using OCaml’s strong typing to trade runtime checks for compile-time guarantees.

By the 2010s, type-driven development became more mainstream. Idris and Agda (dependently-typed languages) showed that one could write the types first and use interactive prompts to develop the code, a technique literally called “Type-Driven Development” by Idris’s creator Edwin Brady. At the same time, more practical languages sought to balance type safety with developer ergonomics. Scala introduced an advanced type system on the JVM, TypeScript added gradual static typing to JavaScript to catch errors in large codebases, and F# brought ML-style typing to the .NET platform.

However, a gap remained in systems programming: languages like C/C++ had minimal type safety for low-level memory manipulation, leading to memory corruption bugs, while safer languages (Java, etc.) incurred performance costs with garbage collection. Rust (begun around 2010 and first released in 2015) emerged to bridge this gap. Rust’s designers, influenced by the research language Cyclone (a safe C variant) and concepts from linear types, created a unique ownership-based type system. Rust promised memory safety without garbage collection by leveraging static types to enforce ownership, borrowing, and lifetimes. This novel approach meant that many bugs (null pointers, double frees, data races) were proactively prevented by the compiler. Rust’s development was informed by academic work (e.g. region-based memory management, linear logic, typestates) and was validated by formal proofs later (e.g. the RustBelt project). By the Rust 2021 Edition, the language’s type system had matured to include features like const generics (for compile-time numeric parameters) and a strong trait system, making Rust a flagship example of type-driven development in practice.

Today, type-driven development in Rust means using the type system as a tool for design, documentation, and verification. The historical journey—from early type theory to modern Rust—shows an increasing belief that well-designed type systems can eliminate bugs early and improve software quality. The following sections will delve into the core principles of type-driven development, both generally and in Rust specifics, demonstrating how decades of research have materialized in Rust 2021’s powerful type system.

Core Principles and Methodologies of Type-Driven Development

Type-Driven Development encompasses several core principles and type system methodologies that have been developed in both academia and practice. We summarize these key concepts below, explaining their theoretical basis and how they relate to writing safer, more correct software. Throughout, we’ll touch on Rust’s relationship to these ideas (where it adopts, approximates, or forgoes them).

Dependent Types

Dependent types are types that depend on values. In a dependently-typed language, you can have a type that encodes an arbitrary property of a value, and the compiler will treat proving that property as part of type-checking. For example, one could define a vector type parameterized by its length (an integer value). A function to get the nth element of a vector might have type index_of : (v : Vector<T, n>, i : Fin n) -> T, where Fin n is the type of “a valid index less than n”. In this way, it becomes impossible to index past the end of the array – such an operation would be a type error, not just a runtime error.

Dependent types can thus make extremely strong guarantees; they subsume many other systems (a refinement type or a simple type is a restricted dependent type). Languages like Agda, Idris, and Coq support full dependent types, allowing developers to encode complex invariants (sortedness of a list, balanced binary trees, protocol compliance, etc.) in the types themselves. By the Curry-Howard correspondence, writing a program that type-checks in these languages can be akin to writing a formal proof of correctness. For instance, Idris’s motto of “Type-Driven Development” refers to writing rich type signatures first (as specifications) and then using the compiler’s feedback to iteratively implement the code. This style is powerful – it’s used in critical systems where correctness is paramount (e.g. cryptographic proofs, certified compilers like CompCert) – but it comes at the cost of complexity. Type-checking is undecidable in the most general settings, so practical dependent type languages restrict what the compiler will attempt to prove automatically, often requiring manual proofs or annotations from the developer.

Rust does not have dependent types in the general sense – you cannot write a type that says “an array of length n” for an arbitrary n and have the compiler derive a proof obligation. Rust’s type system is designed to be decidable and fast for compilation, so it sticks to simpler forms of typing. However, Rust does provide some limited dependent-like capabilities via const generics (stabilized in Rust 2021). Const generics allow types to be parameterized by constant values (e.g. struct ArrayVec<T, const N: usize> can represent a vector with a maximum capacity N known at compile-time). With const generics, one can create APIs that ensure certain size relationships (for example, implement Add for Matrix<m,n> and Matrix<n,p> producing Matrix<m,p> with dimension n checked at compile time). This is a form of type-level computation on values, though far less expressive than what full dependent types in Idris/Agda offer.

In practice, if a Rust developer needs dependent-type-like guarantees (say, ensuring an integer is non-zero or a string matches a regex pattern), they typically enforce it with runtime checks and then encapsulate the value in a newtype so that subsequent code can assume the invariant. (We’ll see examples of this in “illegal states unrepresentable”.) Research is ongoing into bringing more dependent typing to Rust in a sound way, but for now Rust leans on other methodologies for strong static guarantees.

Refinement Types and Liquid Types

Refinement types attach logical predicates to types to create subsets of values with certain properties. For instance, one might refine the type i32 into a type {v: i32 | v != 0} meaning “an i32 that is not zero”. If a function requires a non-zero integer, its type can be specified to take that refined type, and the compiler (with help from a theorem solver) will ensure that any caller provides a non-zero value (related concepts/projects, e.g., Liquid Types). Unlike full dependent types, refinement types are usually decided by automated theorem provers (SMT solvers) rather than requiring manual proofs. They strike a balance: expressive enough to catch many bugs (like division-by-zero, array out-of-bounds, null dereference), but restricted enough to remain checkable automatically.

A prominent example is Liquid Types/Liquid Haskell. Liquid Haskell (Vazou et al., 2014) extends Haskell with refinement types and uses an SMT solver to verify conditions at compile time. For instance, one can specify that a list length is non-negative or that a binary search function actually returns an index within bounds, and the Liquid Haskell checker will prove those conditions or emit errors. This approach has been shown to catch many subtle bugs without requiring full-on proofs from the programmer – the predicates are inferred or relatively simple, and the heavy lifting is done by the solver (related concepts/projects, e.g., Liquid Types). Refinement types have been explored for languages like C and ML in the past (e.g., work by Freeman & Pfenning in the 1990s), but Liquid Haskell popularized the approach in a modern setting. Another project, *F* (F star)**, uses a refinement-based dependent type system for verification of ML and C code, indicating the practical value of this idea in security-critical software.

Rust does not natively support refinement types (there’s no syntax to put a predicate on a type in a function signature). However, the concept is very much in line with Rust’s philosophy of catching errors early. Rust developers often simulate refinement types by combining newtypes and run-time checks. For example, consider ensuring a value is non-zero: one might have a NonZeroI32 type (indeed Rust’s standard library provides types like NonZeroU32) which can only be constructed from an i32 after checking it isn’t zero. Once constructed, a NonZeroI32 is guaranteed (by its internal invariant) to be non-zero, and you don’t need to check again. This is an example of a design pattern for type-driven development: use types to enforce an invariant one time at the boundary (construction) so that you don’t have to continually handle “illegal” cases internally.

There is also active research into bringing refinement types to Rust more directly. Liquid Rust (Flux) is a research prototype that applies Liquid Types to Rust. It allows one to write specifications as attributes, like #[requires(x > 0)] fn f(x: i32) -> i32 { ... }, and have a checker verify that the function is only called with x > 0 and perhaps that it ensures certain postconditions (Flux project, Liquid Types inspiration). Under the hood, this integrates with Rust’s borrow checker and uses SMT solving to reason about values and lifetimes. While not (yet) part of stable Rust, such work demonstrates the potential to extend Rust’s type-driven development capabilities to cover an even wider array of correctness properties (array indices, string formats, etc.) at compile time.

In summary, refinement types enhance static checking by introducing logical predicates, and while Rust proper doesn’t have them as a built-in feature, Rust developers achieve similar ends through careful API design (ensuring certain values cannot be constructed invalidly). The philosophy of refinement – moving checks from runtime to compile-time whenever possible – is very much aligned with Rust’s goals.

Linear Types and Affine Types (Ownership)

Linear types come from linear logic and enforce the rule that values are used exactly once. In programming, a linear type system ensures that if a function takes a linear resource, that resource is consumed exactly one time along every codepath – it can’t be discarded unused and cannot be duplicated. This concept is extremely useful for modeling resources (e.g., file handles, locks, memory) because it guarantees no leaks or double frees: you must hand off or consume a resource exactly one time. Variants of linear type systems allow affine usage (at most once, meaning you can drop a value without using it, which is often practical for programming) or other constraints on aliasing.

Rust’s ownership and borrowing system is essentially a form of affine type system integrated with lifetime tracking. Each value in Rust has a single owning pointer at any given time, and the type system ensures you can’t clone or alias mutable data arbitrarily. If you move a value, the source can’t be used again (use-after-move errors are compile-time errors). If you borrow a value immutably (&T), you can have multiple readers but no writers; if you borrow it mutably (&mut T, which is a linear/unique reference), you get exclusive access until the borrow ends. These rules mean that certain linear logic principles are enforced: for example, you cannot double free because you cannot have two owning pointers to the same allocation – freeing moves ownership to the allocator. Rust’s types (in conjunction with the borrow checker) thus ensure memory safety by static analysis (Jung et al., POPL 2018). Indeed, Rust’s type system ensures memory safety (no use-after-free, no double free, no data race) as long as unsafe code is correctly encapsulated (Jung et al., POPL 2018). This guarantee is comparable to what linear type systems in research languages aim to provide, but Rust manages it in a way that is ergonomic enough for practical coding.

Linear types in pure form have seen adoption in other languages too. Haskell, for instance, introduced linear types extension (GHC 9 with LinearTypes) allowing functions to demand linear usage of arguments (useful for ensuring functions like file handlers properly close files exactly once). The literature (e.g., Wadler, 1990s) often touted that linear types could “change the world” by providing a way to reason about resources and side effects in functional languages. Rust’s success in systematizing these ideas for a systems language is a major milestone in that line of research. It was heavily influenced by earlier work like Cyclone (a research C variant that enforced region-based memory management and some linear constraints) and academic theories of ownership types and alias types from the mid-2000s.

One important distinction: Rust’s type system is affine (resources can be used at most once, meaning you are allowed to not use something and let it drop). This is why you can, for example, create a value and then not explicitly use it – Rust will drop it (which is a use of the resource for deallocation) implicitly. A purely linear system would force you to use it exactly once, which can be burdensome (you’d have to call an explicit dispose or do something with it). Rust chooses pragmatism here, making it easy to write code that might not always “use” a value (especially in error handling paths) without violating linear rules.

In practice, when we talk about type-driven development in Rust, ownership/borrowing is the crown jewel. It’s what allows Rust to statically enforce thread safety (no data races) and memory safety. For example, Rust’s types incorporate the traits Send and Sync to mark which types are safe to pass to or share between threads. These traits are automatically implemented by the compiler for types that obey ownership rules (no interior mutability without synchronization, etc.), and they prevent data from being sent across thread boundaries unsafely. This is a form of compile-time guarantee about concurrent use of resources, deeply rooted in type system concepts. It’s worth noting that Rust’s approach, while very powerful, is also one of the main learning curve issues for newcomers – it requires understanding linear/affine usage patterns and sometimes adjusting algorithms to fit a model where values have a single owner or controlled borrowing. But once mastered, it allows what Rust calls “fearless concurrency”: the ability to write concurrent code and be confident that the compiler has eliminated the possibility of data races by construction.

Session Types

Session types are a typing discipline from the realm of concurrent and distributed systems. A session type describes a protocol for communication (sending and receiving messages in sequence), and the type system ensures that both parties in a communication adhere to this protocol. For example, you can have a session type that says “first send an Int, then receive a String, then end”, and a complementary type on the other side that says “first receive an Int, then send a String, then end”. If a program’s communication pattern conforms to these types, you won’t have mismatch errors at runtime (like one side expecting a message the other side never sends). Session types can even ensure that channels are used linearly (each message exactly once in the correct order), merging ideas from linear types and protocol compliance.

While session types have been studied since the 1990s (Honda et al., 1993 introduced them), they haven’t made it into many mainstream languages as built-in features. They are more often seen in research languages or libraries. Rust does not natively support session types, but interestingly, Rust’s ownership and affine types can help implement them in libraries. Because Rust can ensure that a communication channel is used by exactly one owner at a time, you can encode stateful protocols using the typestate pattern (described later) and generics. For instance, there have been experimental crates where a channel has a phantom type indicating the next message type expected; sending on the channel consumes it and returns a channel with the next state type. This way, if you accidentally try to send the wrong type of message or send out of order, it’s a type error. Such libraries leverage Rust’s compile-time guarantees to enforce a form of session typing without native language support.

In practice, session types are not commonly used in everyday Rust programming (they remain a bit niche and can be verbose to encode). However, the principle is useful for certain domains – e.g., ensuring a network client and server agree on a handshake sequence. More broadly, session types exemplify the make illegal states unrepresentable ethos: a protocol state that’s out-of-order is an illegal state, and by typing the communication, you ensure the program cannot even be written (compiled) to perform an out-of-order action. As asynchronous programming and distributed systems become more important, there’s active research on integrating these protocols into type systems of languages like Rust and Go (which uses channels and could theoretically benefit from similar ideas).

Gradual Typing

Gradual typing refers to systems that allow a mix of static and dynamic typing in the same program. The idea (pioneered by Siek and Taha in the mid-2000s) is to let parts of the code be dynamically typed (for flexibility or ease of writing) and other parts be statically typed, and to have a smooth path to refactor one to the other. Languages like TypeScript (for JavaScript) and Python with type hints (PEP 484) embody this approach. You can start with no types (all dynamic) and gradually add type annotations; the compiler or checker will enforce those where present and insert runtime checks at the boundaries between typed and untyped code.

Gradual typing is an important concept in industry because it provides a migration path for large codebases that started in untyped languages. TypeScript, for example, has been enormously successful in catching errors in what used to be plain JavaScript code, all while allowing developers to opt in to as much strictness as they want. However, gradually typed systems typically cannot guarantee the same level of safety as a fully statically typed language – they often have “escape hatches” (like TypeScript’s any type) and may insert runtime type checks that could fail if you mix types incorrectly. In TypeScript’s case, the type system is intentionally not sound (to avoid over-restricting JS patterns), meaning there are ways to fool it and cause runtime errors even when the compiler is satisfied (e.g., due to coerced types or any usage).

Rust, by contrast, is fully statically typed (except for runtime type information with Any trait which is not for everyday use). There is no gradual typing in Rust – you cannot choose to bypass the type checker on one part of your code. Rust requires that everything checks out at compile time, and it does not have a built-in dynamic type that you can use to turn off checking. (You can use enums to have sum types and variants, or trait objects for dynamic dispatch, but these are still statically type-safe, just more flexible in terms of behavior.) This means Rust leans toward the “strongly, statically typed” end of the spectrum with no middle ground. The benefit is that you get maximum safety guarantees (if it compiled, you have a very high assurance of type correctness at runtime), but the downside is you have to appease the compiler upfront. In dynamic languages or gradually typed settings, you might prototype faster and only later add types, whereas in Rust you design with types from the start.

For a Rust developer, the relevance of gradual typing is mostly in comparison to languages like Python or TypeScript. If you’re coming from a dynamically typed background, Rust will feel very strict – you can’t just throw any two things together; you must design proper data structures and traits. However, this up-front work pays off in fewer runtime errors. Empirical studies in industry (e.g., at Facebook with Hack/PHP, at Microsoft with TypeScript adoption) have shown significant reductions in certain bug categories after adding static types. So while Rust doesn’t do gradual typing, the success of TypeScript and others in catching errors helps make the case for Rust’s approach in domains like web programming (e.g., using Rust for WebAssembly on the frontend, or in backend servers where TypeScript might otherwise be used for quick development).

“Making Illegal States Unrepresentable”

This phrase encapsulates the essence of type-driven development. The idea is to use the type system to eliminate the possibility of representing a state that your program logic says should never occur. If it can’t be represented, you never have to write code to handle it, nor will it ever slip through unhandled. This principle often involves using algebraic data types (enums and structs in Rust) to precisely model the problem domain.

For example, suppose you have a simple workflow: a user can be LoggedOut or LoggedIn, and certain actions (like “compose message”) are only valid when logged in. In an untyped or loosely typed scenario, you might represent the user state as a boolean or just assume by context. But that could lead to runtime errors if you call compose_message when the user is not logged in. With a type-driven approach, you’d represent this as an enum SessionState { LoggedOut, LoggedIn(UserInfo) } and only call compose_message with a LoggedIn variant (perhaps by making it a method on the LoggedIn struct). The compiler will enforce that you handle both cases (exhaustive match) and that you don’t mistakenly call a function with the wrong state. Thus, the invalid state “user not logged in but attempting to compose” is not representable as a well-typed function call – it’s caught at compile time.

Rust’s algebraic data types (ADTs) and pattern matching are instrumental in this regard. Enums in Rust allow you to define sum types (either A or B or C). Each variant can carry data. Pattern matching forces you to consider all variants, or else the code won’t compile (for non-exhaustive matches, Rust gives a warning or error unless you include a catch-all). This means you can design your types so that every possible variant represents a valid state, and there is no “catch-all invalid” state left unrepresented. Contrast this with something like using null or a magic value – those are representable in a less disciplined type system and thus you need runtime checks. In Rust, for instance, if something could be absent, you use Option<T> rather than null; the type Option<T> forces you (via the type checker) to consider the None case. You cannot accidentally forget to handle the None case because if you try to use an Option<T> as a T without unwrapping it, it’s a type error. This is a direct application of making an illegal state (using a value that isn’t there) unrepresentable (you can’t have a T if you only have an Option<T> without explicitly handling the None).

Another powerful tool is the newtype pattern: wrapping a primitive type in a new type to give it semantic meaning and constraints. For instance, if you have various IDs in your program (UserId, OrderId, etc.), in many languages they might all just be integers or strings, and it’s easy to mix them up by passing an OrderId where a UserId was needed. In Rust, you can make a struct UserId(u64); struct OrderId(u64); – now these are distinct types. The compiler will error if you mix them up. You have made the illegal state of “using an OrderId where a UserId should be” unrepresentable because the types don’t allow it. This may seem simple, but it prevents entire classes of bugs especially in large codebases where such mix-ups inevitably happen.

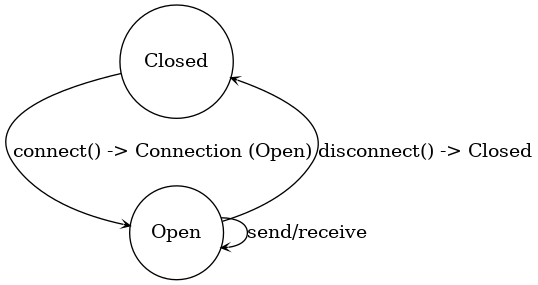

One more example: consider a state machine like a network connection that must be Opened before it can be Closed, and you shouldn’t be able to send data when it’s closed. You can model this with typestates in Rust (see below and the figure description). By having separate types for Connection<Closed> and Connection<Open>, with methods that consume one and produce the other (e.g. connect() turns a Connection<Closed> into a Connection<Open>), you ensure that it’s impossible to call send() on a Connection<Closed> – such a call would be a type error, not something you need to manually guard against at runtime. This pattern is used, for example, in some builders (ensuring required fields are set before build) and in embedded HAL (Hardware Abstraction Layer) APIs to ensure devices are initialized before use.

(Figure Description: A state machine diagram showing a simple connection using typestates. The Closed and Open states are represented by distinct types, and methods transition the generic Connection<State> between states. Arrows show transitions: open() moves from Closed to Open, close() moves from Open back to Closed. Methods like send() or receive() are only available on the Open state type. This makes it impossible, for example, to call send/receive on a Closed connection, because no such method exists for the Closed state type.)

In summary, “making illegal states unrepresentable” is a guiding principle that often leads to designing more types (sometimes small wrapper types or nuanced enums) in order to capture distinctions that prevent misuse. Rust’s type system, with enums, structs (including tuple structs/newtypes), privacy (to prevent creating invalid instances from outside a module), and traits, provides the tools to do this effectively. It encourages a mindset where you ask: can I redesign the types such that this bug cannot happen? Often the answer is yes, at the cost of a bit more type annotation or code structure, but with the benefit of eliminating certain tests and runtime checks entirely (since the invariant is enforced by the compiler). This is arguably the heart of type-driven development: design first with types, and many correctness concerns will be resolved before running a single line of code.

Type-Driven Development in Rust: Core Features

Having covered the general principles, we now focus on how Rust (as of the 2021 Edition) implements and leverages these concepts. Rust’s type system has several distinctive features that enable type-driven development, making it possible to write highly safe and expressive code without sacrificing performance. Below we explore the core Rust features that a type-driven approach uses:

Ownership, Borrowing, and Lifetimes

At the core of Rust is the ownership model. Each value in Rust has a single owner, and when that owner goes out of scope, the value is dropped (deallocated). You can move ownership (e.g., returning a value from a function transfers it to the caller), but after moving, the previous handle is invalid. These rules are checked at compile time.

In addition, Rust allows borrowing: you can have references to values. References come in two flavors – &T (shared, immutable borrow) and &mut T (exclusive, mutable borrow). Critically, Rust ensures at compile time that you cannot have a &mut T while any other references to the same data exist (no aliasing of mutable data), and you cannot have a &T alive while the original is moved or dropped (no dangling pointers). It achieves this through a system of lifetimes and borrow checking. Lifetimes are annotations (often elided by the compiler) that label the scope during which a reference is valid. The compiler uses a borrow checker to ensure references always point to valid data. For example, if you create a reference to a local variable and return it from a function, that’s not allowed because the local goes out of scope – the compiler will refuse, preventing a dangling pointer. These checks are all done statically. Rust 2018 introduced non-lexical lifetimes (NLL) which made the analysis more flexible (the compiler can sometimes shorten lifetimes to allow more programs to compile while still being safe), and Rust 2021 continues with those improvements.

From a type-driven dev perspective, ownership and lifetimes mean many memory safety issues are simply impossible in well-typed Rust code. You don’t need a garbage collector to prevent use-after-free – the type system ensures such situations are errors at compile time. Data races (two threads mutating the same memory concurrently) are also ruled out, because to share data between threads, it must be types that implement Sync (meaning it’s safe to share immutably) and any mutation must go through an Arc<Mutex<T>> or similar, which enforces exclusion also via types. Essentially, Rust turns these concurrency and memory problems into type errors, caught early.

As an example of evidence, Microsoft and Google have both noted that a huge percentage of security bugs (up to 70%) in systems programs are due to memory safety issues – exactly the issues Rust’s type system addresses (cf. Jung et al., POPL 2018). By using Rust in systems that were historically in C/C++, companies have observed significant drops in those classes of bugs (e.g., Android’s adoption of Rust for low-level code led to zero memory safety vulnerabilities in the new Rust components over a year, whereas C/C++ components continued to have such bugs).

For a Rust developer, understanding ownership and lifetimes is crucial to succeed in type-driven design. At first it may feel like the compiler is fighting you (“cannot borrow because it’s already borrowed” errors, etc.), but these are essentially the compiler proving to you that some path in your code could lead to a bad state. By redesigning the code (often by changing how data is structured or how long things live), you align with the ownership model and the errors disappear – and so do the potential bugs those errors were warning about. In this sense, the borrow checker is your ally in TDD, acting as a strict but helpful guide to writing memory-safe code.

Traits and Generics (Type Constraints)

Rust’s traits are a cornerstone of its type system, providing a mechanism for ad-hoc polymorphism (interfaces) and constraints on types. A trait in Rust is similar to an interface in other languages – it defines a set of methods (and possibly associated types or constants) that a type can implement. Traits enable static dispatch (monomorphized generics) and dynamic dispatch (dyn Trait) where needed.

In type-driven development, traits allow you to encode certain capabilities or properties as types. For example, one might have a trait Sortable which guarantees that a type has an ordering, or a trait Buffer that ensures something can be written to/read from. Generic types and functions in Rust use traits as bounds to specify what operations or properties the type parameters must have. For instance, a generic sort function might require T: Ord (meaning the elements can be compared and ordered). The compiler will enforce that any type substituted for T implements the Ord trait. This is a form of compile-time contract: you cannot call sort() on a vector of a type that isn’t orderable, because it wouldn’t compile. This catches errors where you might call the wrong function or use a type in an invalid way.

Traits can also be used to prevent misuse. Consider a resource that can only be accessed under certain conditions – you might model it by a trait that is only implemented for types that meet those conditions. A real-world example is Rust’s lifetimes and the Send/Sync traits for threads: the compiler automatically implements Send for types that are safe to send to another thread (i.e., they don’t contain non-Send parts like raw pointers without synchronization). If you try to spawn a thread with a non-Send type, it won’t compile. So the trait system + generics is enforcing thread safety by preventing certain illegal combinations (non-thread-safe type in a thread) – again, a type-driven enforcement of a correctness property.

Rust 2021 improved on the trait system with features like const generics and (on nightly/stabilizing) generic associated types, allowing even more expressive constraints at compile time. It’s worth noting that Rust (currently) lacks higher-kinded types (HKTs), which Haskell and Scala have and which allow things like “a trait for all F<_> with certain properties” (although you can emulate some patterns with associated types or by hand). This is one limitation of Rust’s type system compared to some others, but work is ongoing (the impl Trait and dyn Trait were steps, and there’s discussion of adding HKT in the future).

Even without HKTs, Rust’s trait system has proven very powerful – one can use it to do some type-level programming, enforce invariants (via marker traits), and achieve zero-cost abstractions by inlining and monomorphization. From a design standpoint, thinking in terms of traits and generics is key to writing reusable Rust code. It allows you to capture the essence of an operation in the type system. For example, the fact that you can implement Iterator for a type means you can use it in a for-loop. That’s a contract checked at compile time (does it implement Iterator?). If you accidentally try to iterate over something that isn’t iterable, you’ll get a clear compile error. In other words, traits allow semantic constraints (like “iterable”, “comparable”, “cloneable”) to be part of the static program structure. Many design patterns in Rust (such as conversion traits From/Into, the Drop trait for custom destructors, etc.) rely on this type-driven approach to ensure consistency and correctness across the codebase.

Algebraic Data Types (Enums and Structs) and Pattern Matching

Rust’s data types – particularly structs (product types) and enums (sum types) – enable developers to construct data models that mirror the problem domain closely. Together with pattern matching, they provide both expressiveness and safety. We touched on this in “illegal states unrepresentable,” but let’s delve a bit deeper.

A struct in Rust is a collection of named fields (or tuple elements). It’s analogous to a record or a simple class (with no methods by itself). Structs are useful for bundling data that belongs together. A tuple struct or newtype is a struct with a single field, often used to create a distinct type from an existing type (for type safety reasons or to implement different traits).

An enum in Rust represents a choice between variants. Each variant can have data associated with it. Enums are extremely powerful for modeling state that can vary. For example, Rust’s standard library defines:

Option<T>: eitherSome(T)orNone, representing an optional value.Result<T, E>: eitherOk(T)for success orErr(E)for error, representing the result of an operation that might fail.

These types eliminate the need for null or exception mechanisms by making the possibility of absence or error part of the type signature of functions. You must handle both cases (as the type demands it). Pattern matching is the way you typically unpack enums:

match result {

Ok(val) => { /* use val */ },

Err(e) => { /* handle error */ },

}If you forget an Err case, Rust will refuse to compile (unless you add a _ => panic!() or something, which is an explicit choice to ignore it). This is significantly safer than, say, ignoring an error code in C or catching exceptions generically – the compiler is keeping you honest.

For type-driven development, the combination of ADTs and pattern matching is a dream: you can model any finite state or differentiated set of cases, and the language forces you to consider them. There’s also exhaustiveness checking and inaccessibility checking in pattern matches: Rust will tell you if a match might be non-exhaustive (some variant not covered), and it will warn if a pattern is impossible (say you match variant A in one arm and variant B in another, and then have a third arm matching “anything” – the compiler will warn that “anything” only covers variant C if that’s the only one left, so you might as well write C explicitly). This gives a very high level of assurance that your code handles all possible inputs.

A practical Rust pattern is to use enums to represent state machines or events, and use pattern matching in the logic. It yields code that is both readable (no lengthy if-else chains with magic constants, but rather clear named cases) and reliable (if you add a new variant, the compiler will flag every place you need to handle it, acting like a to-do list for refactoring). This is a key difference when refactoring: in an untyped setting, adding a new state might require searching through code for places to update (and you might miss some), but in Rust the compiler will catch every missed case for you.

Zero-Cost Abstractions

Rust is often advertised as having “zero-cost abstractions,” meaning you can create high-level constructs that, when compiled, produce code as efficient as hand-written low-level C. This concept, originally popularized in C++ (the idea that abstractions shouldn’t incur runtime overhead), is achieved in Rust through its monomorphization of generics, inlining, and careful design of the language (no implicit boxing or hidden virtual tables unless you use dynamic traits explicitly).

For type-driven development, zero-cost abstractions are crucial because they encourage developers to use the type system freely without worrying that doing so will bloat the executable or slow down the program. For example, if you use an enum to distinguish states, at runtime that’s just an integer tag plus data – very minimal overhead. If you use generics and traits to abstract over types, the compiler generates specialized code for each concrete type (in the case of static dispatch), meaning the calls get inlined and optimized as if you wrote them specifically for that type.

A concrete example: the Iterator trait in Rust. You can use a chain of iterator adaptors (map, filter, etc.) which, from a code perspective, are many layers of abstraction (each an object with a next() method). However, since they are generics, the compiler can fuse them and optimize the hell out of them, often resulting in machine code similar to a simple loop with if statements. Thus, you got the benefit of a clear abstraction (a sequence of transformations) with zero runtime cost overhead. This is type-driven development in the sense that the abstraction (the trait and generic implementations) allowed you to write high-level code, and the compiler’s strong backing of it through monomorphization means no performance penalty.

Rust encourages using types for safety even in performance-sensitive code because of this zero-cost principle. You can introduce newtypes or wrapper structs to enforce invariants, and as long as they are simple (containing a basic type) the compiler will likely optimize them out (or at worst, it’s an extra move of a primitive which is negligible). There’s no runtime reflection needed for enforcing type rules – it’s all compile-time, so it doesn’t cost CPU cycles in production. This is unlike some other languages where using a more complex type system or abstraction (say heavy use of interfaces or certain patterns) might introduce virtual dispatch or runtime checks; in Rust, you pay for what you use (if you use dyn Trait for runtime polymorphism, that’s a virtual call – but you opt into it; if you use static dispatch, it’s free of overhead).

The outcome is that Rust empowers developers to “turn the dial” on static safety to max without fear. Want to have 10 different newtypes for different IDs? Go ahead – it won’t slow the program (the machine code might treat them all as the same underlying integer type after optimization). Want to ensure at compile time that an array length matches some other input? Use const generics – it’s checked by the compiler and doesn’t exist at runtime. This alignment of performance with type safety is a huge selling point of Rust in domains like games, systems programming, and high-performance computing, where traditionally people would drop to C for speed and give up type safety.

Type-Level Programming (Const Generics, Phantom Types, etc.)

While Rust is not a dependently-typed language, it does offer ways to perform type-level programming – computations or logic that happen at compile time, influencing types or requiring certain types to exist. We’ve mentioned a few: const generics, which let you use integers or other const values as type parameters (like array lengths), and traits, which can act like functions on types (mapping a type to an associated type, etc.).

There are also phantom types and zero-sized types used to carry compile-time information. A phantom type is typically a generic type that doesn’t actually use one of its type parameters at runtime, but carries it for type-checking purposes. Rust even has a marker PhantomData to mark unused type parameters. This is often used for the typestate pattern or to attach units to numbers (e.g., a Length<Meters> vs Length<Feet> phantom type to prevent mixing units). Phantom types allow adding compile-time metadata without affecting runtime representation (the compiler knows to optimize out PhantomData as it has size zero). For example, you might have struct Connection<State> { ... PhantomData<State> ... } and never construct a real State object, but the compiler treats Connection<Open> and Connection<Closed> as distinct types – voila, typestates purely at compile time.

Const evaluability in Rust is another aspect: more functions and expressions are gaining the ability to run at compile time (const fn). This means you can compute things during compilation and bake them into types or constants. For instance, you could have a const fn compute_size(x: u32) -> usize { ... } and use it in an array type like [T; compute_size(5)]. Rust 2021 has const generics and a lot of const fn capabilities stabilized, although some advanced const evaluation (like heap allocations in const context) are still nightly features. The direction is clear: Rust is moving toward more powerful compile-time programming (similar to C++ constexpr, but without some of the footguns).

Macros in Rust (while not a type system feature per se) also contribute to type-driven development by allowing you to generate code based on patterns, which can sometimes be used to ensure certain invariants (for instance, generating an enum and impls from a list of variants, reducing human error, or implementing traits for multiple types through a macro). Procedural macros can even enforce certain patterns at compile time by examining the code (like a mini compiler plugin). These are more like meta-programming facilities, but they complement the type system by enabling domain-specific checks or boilerplate reduction that keeps the code consistent (and thus type-correct).

In summary, Rust gives you several tools to push computation to compile time and enrich your types with extra information (consts or phantom types). While it’s not as free-form as Haskell’s type-level programming (which can get very abstract with type families, etc.), it covers practical needs. And importantly, everything that happens at type level is checked by the compiler for consistency. Type-driven Rust development often means thinking: can I encode this rule as a trait or const such that misuse is a compile error? If yes, you do it, and you’ve saved a runtime check or potential bug. Examples include ensuring a certain protocol of function calls (with phantom types), doing size calculations at compile time (const generics) to avoid mistakes, or using the type system to differentiate units and contexts.

Unsafe Code and Encapsulation with Types

Rust’s pledge of safety has one caveat: unsafe code. The unsafe keyword allows you to perform operations that the compiler can’t guarantee to be safe (dereferencing raw pointers, calling foreign functions, accessing mutable static variables, etc.). Unsafe is necessary for implementing low-level primitives and interacting with hardware or other languages.

However, even unsafe code can be written in a type-driven way to minimize risk. The key idea is encapsulation: you confine unsafe code to small modules and wrap them in safe abstractions, using types to enforce the correct usage of those abstractions. A classic example is Rust’s Vec<T> (growable array) implementation. Internally, it uses raw pointers and manual allocation (unsafe operations) to manage the buffer. However, the Vec<T> API is safe because its methods are carefully designed to uphold invariants (like the pointer is valid and points to initialized elements within bounds). The type Vec<T> carries the information about its length and capacity and ensures (via methods) that you can’t push beyond capacity without reallocating, can’t index out of bounds (indexing does a check or returns an Option via get), etc. The unsafe code inside Vec is verified by Rust developers (and by things like MIRI or formal verification efforts), but as a user of Vec, you don’t deal with unsafe; the type and its methods handle it. This is a general pattern: use types to make unsafe interfaces safe.

Another example: Rust’s NonNull<T> type in the standard library is a wrapper around a raw pointer that is guaranteed to be non-null. Its constructor is NonNull::new(ptr: *mut T) -> Option<NonNull<T>> – it returns None if you pass a null pointer, so if you get a NonNull<T> you have a guarantee it’s not null. Then you can use it in other unsafe code that requires non-null pointers (avoiding one class of bugs). The existence of NonNull<T> as a distinct type means the programmer made “null is illegal” into the type system, rather than just assuming a raw pointer is non-null by convention. Even though working with NonNull<T> still requires unsafe when dereferencing (because it’s raw), it’s safer than a plain *mut T because of that invariant. This shows how making a new type to encapsulate an assumption can make reasoning in unsafe code easier.

Rust also relies on types to interface with foreign code (FFI). For example, if you’re calling C functions, you will mark functions as extern "C" and use types that mirror C types (c_int, *mut c_void, etc.). The act of binding foreign functions often involves unsafe (because calling them can’t be checked by Rust), but you typically wrap those calls in safe Rust functions that perhaps translate a raw result into an enum or use a newtype to represent a handle returned by C. This way, as soon as possible after crossing the FFI boundary, you convert into a Rust type that has invariants. All further code uses the safe Rust type. If something goes wrong, it likely goes wrong at that boundary and can be audited there.

In projects that require a lot of unsafe (like writing a kernel or interacting with hardware registers), it’s common to isolate unsafe in specific modules (maybe name them hw or ffi or arch_specific) and have the rest of the program use safe interfaces provided by those modules. By documenting invariants (e.g., “function X must be called before Y” or “you must not call Z after calling Y”), and ideally enforcing them with types, one can make large unsafe sections more tractable. The Rust community has also developed tools like MIRI (an interpreter that can catch some undefined behavior in unsafe code) and static analyzers to check unsafe code. Furthermore, the RustBelt project (Jung et al. 2018) gave a formal foundation for Rust’s unsafe, essentially proving that if you follow certain rules in unsafe code (called “safety contracts” of abstractions), the entire program remains memory safe (Jung et al., POPL 2018). This is an academic reassurance that Rust’s design (types + selective unsafe) is sound.

In essence, type-driven development in Rust doesn’t mean zero unsafe code – it means structuring code so that the unsafe parts are small, well-defined, and checked indirectly by the surrounding safe code’s types and invariants. When done right, you get most of the benefit of a fully safe language, but with the power to do low-level manipulations where needed (just like operating systems or game engines sometimes need to). It’s a marriage of formal guarantees and practical escape hatches, leaning on the type system to keep the escape hatches from wreaking havoc.

Comparisons to Other Languages

To put Rust’s approach in context, it’s helpful to compare it with other languages that have embraced type-driven philosophies to varying degrees. Each of these languages has different strengths and historical contexts, and understanding them can illuminate why Rust takes the path it does and what alternatives exist for certain type-driven goals.

Rust vs. Haskell

Haskell is a purely functional language with a very strong static type system. Both Rust and Haskell share a love for algebraic data types, pattern matching, and type classes (Haskell’s type classes are analogous to Rust’s traits). Haskell, however, goes further in some areas: it has higher-kinded types (HKTs), allowing type constructors to be abstracted (e.g. a Functor is a type class for types of kind * -> *). It also supports GADTs (Generalized Algebraic Data Types), providing more powerful pattern matching and type refinement capabilities – for instance, you can have an algebraic data type that in certain constructors carries proof that some type variable is a specific type. This can achieve some dependent-type-like behavior within Haskell’s type system. Haskell’s type system is also very extensible via language extensions: you can have type families (type-level functions), existential types, multi-parameter type classes, etc.

In terms of type-driven development, Haskell allows extremely sophisticated type-level programs – some Haskell libraries effectively do theorem proving or employ type-level machinery to ensure correctness properties (e.g., using GADTs to enforce a parser consumes exactly the right input, or using phantom types and type classes to ensure units of measure).

Rust, by contrast, is not as type-theoretically powerful: it lacks HKTs and GADTs (though you can simulate some GADT-like behavior with enums and trait objects, it’s not as seamless). Rust’s generics are closer to Haskell type classes, but without higher-kinded polymorphism or the ability to do arbitrary type-level computation (no equivalent of type families). On the other hand, Rust has the ownership system which Haskell does not (Haskell uses garbage collection and doesn’t try to solve memory management via the type system, except in some linear types extension for uniqueness or in uniqueness types in Clean, an offshoot). Rust is also impure (imperative features, mutation allowed under control) whereas Haskell is pure and has a whole type-driven scheme for effects (the IO monad and others). In Haskell, effectful operations are tracked via types (monads, etc.), whereas Rust tracks memory effects (borrowing, mutation) via types but not general I/O or side effects (those are not tracked beyond requiring unsafe for some things).

In practice, Rust code may be less abstractly typed than Haskell code, but often more focused on data layout and mutation safety. Haskell excels at things like making illegal states unrepresentable through algebraic types – something Rust also does well. However, Haskell code can sometimes leverage laziness and types to a higher degree of mathematical abstraction (for example, using a rich lattice of typeclasses for different properties like Monoid, Functor, Applicative, Monad, etc., which have no direct analogue in Rust’s standard library – Rust tends to just use concrete impls rather than abstract algebraic classes).

One key difference is runtime: Haskell has a GC and is optimized for high-level abstraction, which can sometimes incur overhead (though GHC is quite good). Rust promises C-like performance consistently due to zero-cost abstractions. So one might say: if you need the ultimate in type expressiveness and are working in a domain where you can afford GC and don’t need manual memory control, Haskell might let you enforce even more properties at compile time than Rust. But if you need low-level control (like systems programming, embedding into OS or WASM), Rust’s type system is more geared towards those use cases (ownership, no GC) even if it means some things like effect types or extremely fancy generics aren’t present.

Rust vs. OCaml

OCaml is a member of the ML family (like Haskell’s cousin in a way, though not pure). It has a strong static type system with type inference (Hindley-Milner). OCaml’s strengths include a simple and sound type system with algebraic data types, pattern matching, and parametric polymorphism, but it’s not as fancy as Haskell’s – OCaml doesn’t have type classes (though it has a powerful module system and functors for parametric modules, which are somewhat like generics at the module level).

OCaml’s approach to type-driven development is to use ADTs and pattern matching heavily, much like Rust. In fact, Rust’s enums and pattern matching were directly inspired by OCaml (and other MLs). If you are coming from OCaml, Rust’s way of encoding variants and options and results will feel very familiar. OCaml, however, uses a garbage collector and doesn’t have an ownership model. It can’t guarantee absence of data races except by convention (though its memory model and runtime discourage certain patterns, it doesn’t enforce like Rust does). OCaml’s types also are not as expressive in some ways: it has polymorphic variants and objects for dynamic dispatch, but not traits or type classes.

Comparing the two, Rust is like OCaml with manual memory management that’s checked by the compiler (instead of a GC) and with traits instead of structural polymorphism. Rust also has a macro system more powerful than OCaml’s, and a larger emphasis on types for concurrency and safety. But as far as making illegal states unrepresentable, an OCaml programmer would have a very similar approach: define a variant type for each state, use pattern matching, etc. The big difference is an OCaml program can still throw exceptions or have certain errors (like match inexhaustive will throw at runtime unless warnings are heeded, or one might still use null in some corner cases with Obj.magic). Rust eliminates more via type system (no exceptions at all; everything goes through Result, ensuring handling).

OCaml’s module system allows some level of abstraction where you can specify signatures (interfaces) and have functors (functions from modules to modules) which is more static than Rust’s approach (Rust would use traits and generics to achieve similar ends). In some cases, OCaml’s functors can act like a more powerful generics (e.g., generating specialized code for a given module implementation). Rust’s generics are less modular in that sense but more straightforward.

In industry, OCaml has been used for large systems (e.g., Facebook’s Flow, some of Jane Street’s trading systems). Those teams rely on OCaml’s type system to maintain reliability. Rust offers comparable benefits in type safety but with the additional promise of no GC and safe concurrency. It’s telling that some industrial users of OCaml (like certain systems programmers) have shown interest in Rust because it extends the ML ethos into the systems realm.

Rust vs. Scala

Scala is a JVM language that blends object-oriented and functional paradigms, and it has a very rich type system. Scala’s type system includes generics, variance, higher-kinded types, implicits (which can be used to emulate type classes), and even some dependent type-like features via path-dependent types. Scala is known for its powerful implicit mechanism that can auto-derive implementations, which is used heavily in libraries like Cats (for functional programming) to provide Haskell-like type classes. Scala’s type system, being on the JVM, has to interoperate with Java, so it’s not as purely functional as Haskell, but it tries to offer a lot of compile-time safety and expressiveness.

Comparing Scala to Rust: Scala allows some things Rust doesn’t (HKTs, more dynamic dispatch by default since it’s on JVM, union and intersection types in newer versions, etc.). But Scala relies on JVM GC for memory and doesn’t have the concept of ownership. Concurrency in Scala is often handled by either using immutable data (to avoid issues) or by using the actor model (Akka), or simply trusting developers with synchronization. Scala doesn’t prevent data races at the language level – it’s similar to Java there (though you can use @volatile or other tricks, it’s not fundamentally enforced by the type system).

For type-driven development, Scala developers often use the type system to enforce high-level properties (like using traits and abstract types to enforce certain protocols, or using shapeless library for generic programming). There have been efforts to introduce more advanced type features (e.g. Dotty, now Scala 3, has union types, implicit function types, etc., making the type system even more powerful). But this complexity can be a double-edged sword; Scala’s compilation and type inference can be slow or produce very complex error messages when things go wrong. Rust’s type system, while complex in the ownership part, is somewhat simpler in pure type theory terms (no HKTs, etc.), which sometimes results in more tractable compiler errors for generic code, albeit sometimes borrow checker errors are tricky in a different way.

One interesting point: Scala (especially with frameworks like ZIO or Cats Effect) has trended towards doing more compile-time verification of effect management (like checking that you handle all errors, not use blocking calls without marking them, etc., using type wrappers). Rust ensures at compile time that you handle Result errors (since you can’t ignore them easily without unwrap or ? which is explicit). In Java/Scala you could ignore exceptions or use unchecked ones – Rust’s Result forces a more disciplined approach, similar to how Scala’s Try or Either might be used but Rust makes it idiomatic and somewhat mandatory. So in error handling, Rust is perhaps more type-driven by default, whereas Scala gives you both approaches (exceptions or functional error handling).

In terms of performance, Scala’s abstractions can sometimes carry a runtime cost (though the JVM JIT is good at inlining, heavy use of boxed types or typeclass implicits might allocate objects). Rust’s zero-cost goal means you often get better performance in Rust for equivalent algorithms, at the cost of sometimes more work (e.g., Scala’s garbage collector vs Rust’s manual memory management, but safer in Rust).

Rust vs. TypeScript

TypeScript is a gradually-typed superset of JavaScript. It’s quite different from Rust in execution model (TypeScript compiles to JS, runs with GC, etc.) but interesting to compare in terms of type philosophy. TypeScript’s type system is structural and gradual: you can start with plain JS and add type annotations incrementally. It has an extremely powerful type inference and algebraic capabilities (union types, intersection types, mapped types, etc.) mostly geared toward modeling JS patterns and doing compile-time checks, but it is intentionally not sound in all cases. For example, TypeScript will allow some unsound casts or will consider an operation type-correct due to how it widens types, even if at runtime it might fail. The motto is more to help catch common errors, not guarantee absolute absence of type errors.

Rust, in contrast, is fully sound (modulo unsafe). TypeScript might let you treat a number as an object after some casting; Rust will never let you freely reinterpret types without unsafe and explicit action. Rust’s types are nominal (an object’s type is what it is declared as), whereas TypeScript’s are structural (you can treat two objects with the same shape as interchangeable types even if they weren’t declared with the same interface explicitly). This structural typing is great for flexibility but means if you accidentally pass the wrong object with the right fields, TypeScript might accept it, even if semantically it’s not what you intended.

Type-driven development in TypeScript often involves adding interfaces and types to catch mistakes (e.g. ensuring an API returns the expected JSON shape). It improves maintainability, but you often still write unit tests to ensure at runtime things are correct, because the static check isn’t 100% guarantor. In Rust, if it compiles, you have a much stronger guarantee that it won’t panic or misbehave (excluding logic bugs that types can’t catch, of course). Also, Rust’s type system can express things TypeScript’s can’t easily, like lifetimes or ownership (not relevant in GC world) or precise sum types (TypeScript has union types which are similar to Rust enums but without exhaustiveness checking by default – though TypeScript does have discriminated unions which approach that idea).

One commonality is that both communities value tooling: Rust’s compiler and rust-analyzer are analogous to TypeScript’s tsc and language server in giving immediate feedback. Both have seen that good IDE integration makes working with types much more pleasant (Rust’s autocomplete and borrow checker diagnostics, TypeScript’s error messages and auto-imports).

From a usage perspective: you’d use TypeScript for high-level application logic (especially front-end or Node.js) where the ease of a GC and dynamic features are needed, but you still want some static checking. You’d use Rust when you need absolute performance or safety (e.g., building a WebAssembly module for a CPU-intensive task, or a backend service where you want to avoid runtime errors and get closer to the metal for performance). Sometimes they even complement each other (e.g., a TS front-end calling into a Rust-compiled-to-WASM library for speed-critical operations – TS ensures the front-end logic is consistent, Rust ensures the core logic is correct and fast).

Rust vs. Idris/Agda

Idris and Agda are dependently typed languages used primarily in academic and research settings (and for certain sophisticated applications requiring formal proofs). They allow you to state and prove properties about your code within the code itself. For example, in Idris you might write a function sort : (xs : List Int) -> (ys : List Int) ** (Sorted ys && Permutation xs ys), meaning the function returns a list ys that is sorted and is a permutation of the input. Idris would then require you to actually provide a proof or evidence that your implementation meets that spec (often via writing additional functions or using tactics). This is an incredibly strong guarantee – far beyond what Rust’s type system can do – but it’s usually overkill for general programming, and it requires a lot of manual proof work from the developer.

Rust does not attempt to compete in that space at all. If you needed that level of guarantee in something like Rust, you’d integrate with a formal method externally (e.g., write a proof in Coq and then extract to Rust, or use model checking or property tests to gain confidence). Rust’s philosophy is to catch a large class of common errors automatically without involving the developer in theorem proving, and otherwise rely on runtime checks or tests for more complex correctness. In contrast, Idris/Agda push the boundary: any property you care about, encode it in the type and have the compiler check it. The downside is that you, the programmer, often act as the prover when the property isn’t trivial.

From a type-driven development viewpoint, Idris is actually where the term TDD (Type-Driven Development) was explicitly coined (Brady’s book “Type-Driven Development with Idris”). In Idris, you literally write a type signature and interactively refine the program until it compiles, at which point it’s basically correct by construction (for the properties encoded). Rust can’t reach that high bar; it focuses on memory safety, thread safety, and some degree of value safety (e.g., options/results) but doesn’t prove functional correctness of algorithms.

Another practical consideration is that Idris and Agda are not aimed at performance or low-level tasks. They are often used to verify logic and then maybe the code gets translated to Haskell or C++ for actual running, or it’s used in domains where performance is less critical. Rust has to generate efficient machine code for potentially system-level tasks. Ensuring something like sorting is correct via proof in Rust’s context would be too time-consuming and would make typical development impractical. Instead, Rust might encourage writing tests (or using property-based testing to assert that the sort output is sorted and same elements). This combination of strong static typing with runtime testing covers many bases effectively, if not exhaustively.

In summary, Idris and Agda represent the extreme end of the spectrum of type-driven development – where types can express and enforce any invariant, and programs are essentially proofs. Rust draws inspiration from that ethos (we want as many guarantees as feasible), but stops at the boundary of what is automatable and pragmatic for system programming. However, there is some convergence in specific areas: for instance, the idea of refinement types in Rust (Liquid Rust) is bringing a bit of the Idris spirit (automated proving of certain specs) to Rust, but in a way that doesn’t force every developer to be a proof expert.

Rust vs. Other Notable Mentions

- C/C++: It’s worth mentioning as a contrast. C and C++ have static types but weak enforcement of safety (especially C). C++ adds stronger type features (templates,

auto) but retains pitfalls of manual memory management without ownership checking, and undefined behavior. Rust overcomes C++ shortcomings by providing similar performance with much stronger static guarantees. Where C++ relies on smart pointers and guidelines, Rust’s compiler guarantees safety, giving it an edge in reliability. Many migrate components from C++ to Rust for fewer bugs without sacrificing speed. - Go: In systems programming, Go prioritizes simplicity with a simpler static type system (recent generics, no ADTs, GC, data race prevention via convention). Rust prioritizes catching more at compile time, accepting more complexity. Rust vs Go is a trade-off: ease-of-use initially (Go) vs stricter safety (Rust). Rust yields fewer runtime surprises but has a steeper learning curve.

- Java/C#: Nominally statically typed, primarily OOP. They lack strong type-driven philosophies beyond basic interfaces/classes. They allow

null(unless using newer annotations/Optional), rely on runtime exceptions. They are moving towards more type safety (JavaOptional, C# nullable reference types), but Rust is far ahead by design (eliminating null, requiring error handling). They focus on runtime managed memory (GC) and enterprise development ease, not encoding invariants in types. Rust offers an upgrade in safety, feeling like a mix of C++ performance and ML safety.

Each language has its niche. The trend favors strong static typing for maintainability and correctness. Rust applies this to low-level programming, historically dominated by unsafe languages, drawing from C++ (performance), ML (safety), Haskell (traits), and looking towards the future (dependent types).

Empirical Benefits and Limitations of Type-Driven Development in Rust

What does empirical evidence say about using Rust and strong typing?

Benefits: Defect Reduction, Reliability, and Maintainability

- Fewer Memory Safety Bugs: Microsoft reported ~70% of security vulnerabilities stemmed from memory safety issues (cf. Jung et al., POPL 2018). Rust eliminates this class of bugs in safe code. Both Microsoft and Google (Android team) observed zero memory safety vulnerabilities in new Rust components compared to ongoing issues in C/C++ counterparts.

- Improved General Reliability: Mozilla’s Servo engine achieved parallelism and safety difficult in C++. Amazon AWS used Rust for Firecracker (microVMs), citing safety and performance enabling fast startups and strong isolation. Cloudflare, Dropbox (replacing Python for performance), and blockchain projects (Solana, Polkadot) leverage Rust for performance and correctness where bugs are costly.

- Enhanced Maintainability and Refactoring: Studies (e.g., Ray et al., ICSE 2018) suggest static typing improves maintenance in large codebases. In Rust, changing a type definition causes the compiler to flag all affected locations, guiding refactoring safely. This reduces time spent debugging compared to C/C++, despite potentially longer compile times. Teams report higher confidence after compilation.

- Clearer Code and Documentation: Types act as enforced documentation. Rust function signatures clearly state expectations (

Option,Result), unlike languages with implicit nulls or exceptions. Types cannot lie (outsideunsafe), improving predictability. - Safer API Design: Rust encourages designing APIs that are hard to misuse. Libraries like

actix-web(web framework) use types for configuration checks, anddiesel(ORM) verifies SQL queries against the schema at compile time, eliminating entire classes of runtime errors.

Productivity Costs and Learning Curve

- Steep Learning Curve: Ownership and lifetimes are unfamiliar concepts for many programmers. Beginners often struggle with borrow checker errors, requiring a shift in thinking and potentially feeling unproductive initially. Surveys indicate this is a significant barrier to adoption.

- Compile-Time Performance: Rust’s thorough checks (borrow checking, monomorphization, optimization) lead to longer compile times than languages like Go or dynamic languages. This impacts the edit-compile-test loop. Incremental compilation, caching (

sccache), and tools like Rust Analyzer (inline checks) mitigate this, but it remains a trade-off (compile time vs. debug time). - Limitations of Type System: Rust doesn’t prevent all bugs, particularly logic errors or algorithmic flaws. Testing (unit, integration, property-based) is still crucial. Over-reliance (“it compiles, so it’s correct”) can be risky.

- Ergonomic Constraints: The lack of features like HKTs can make certain abstractions (e.g., generic containers) more verbose or awkward than in Haskell/Scala. Rust favors composition over inheritance, which sometimes requires more boilerplate (though macros help). There’s ongoing tension between adding features for convenience and maintaining simplicity.

- Interoperability Challenges: Integrating Rust into existing C/C++ codebases via FFI can be complex, often requiring careful boundary management. Tools like the

cxxcrate help, but mixing languages isn’t as seamless as within a single VM (e.g., Scala/Java) or with gradual typing (TypeScript/JS). - Ecosystem Maturity: While growing rapidly, Rust’s library ecosystem may lack mature solutions in some niche domains compared to older languages like Python or Java. This is improving quickly.

- Adoption Hurdles: Convincing teams to adopt Rust can be challenging due to the perceived learning curve and the cost of rewriting stable systems. Despite being consistently voted “most loved,” Rust adoption in practice faces inertia.

In summary, Rust’s type-driven approach empirically delivers on safety and maintainability, especially for systems programming. The costs (learning curve, compile times) are real but often decrease as developers gain experience and tooling improves. The trend is positive, with ongoing efforts to lower barriers to entry.

Applications and Case Studies

Type-driven development in Rust finds application across diverse domains:

- Systems Programming and Operating Systems:

- Theseus OS: Experimental OS using Rust types for runtime safety and state management.

- Linux Kernel: Adopted Rust for drivers (starting Linux 6.x) to reduce memory safety bugs common in C drivers.

- Google Fuchsia OS: Uses Rust extensively for components, citing faster development of reliable code.

- Embedded Systems:

- Drone OS, Tock OS: Use Rust for safe code on microcontrollers, leveraging type safety and hardware isolation.

- Hardware Drivers: Typestate pattern commonly used (e.g., GPIO pin as

InputPinorOutputPintype) to prevent misuse at compile time. - Critical Systems: Aerospace/automotive exploring Rust for safety-critical firmware (e.g., Ferrous Systems work, aiming for ISO 26262 compliance).

- Web Servers and Services:

- Cloudflare Workers: Rust used for security and performance in the edge runtime infrastructure.

- Dropbox: Replaced Python components with Rust for performance-critical storage logic, avoiding concurrency bugs.

- AWS, Discord, Facebook: Use Rust for high-performance, reliable backend services.

- Web Frameworks (Actix, Rocket): Employ types for route parameter validation and mandatory error handling (

Result).

- Blockchain and Smart Contracts:

- Parity/Substrate (Polkadot): Chose Rust for speed and safety in blockchain implementation.

- Solana: Uses Rust as the primary language for high-performance, safer smart contracts.

- Move Language (Diem/Libra): Inspired by Rust’s affine types to ensure resource safety in smart contracts.

- Concurrent and Parallel Computing:

- Rayon Library: Provides safe data parallelism leveraging Rust’s

Send/Synctraits to prevent data races. - Servo Browser Engine: Demonstrated safe parallel DOM rendering, influencing Firefox Quantum.

- Rayon Library: Provides safe data parallelism leveraging Rust’s

- Security-Critical Systems:

- Cryptography Libraries (ring, Rustls): Offer memory safety advantages over C implementations (avoiding bugs like Heartbleed). Microsoft exploring Rustls for Windows TLS.

- Static Analysis (MIRAI): Tools built by companies like Meta (Facebook) augment Rust’s type checks with custom assertions for higher security assurance.

- WebAssembly and the Web:

- Rust is a popular choice for WASM due to performance, small binaries, and safety guarantees carrying over to the web sandbox.

- Used for performance-critical web components (e.g., game physics engines, codecs, image processing libraries).

- Finance and High Reliability Business Logic:

- High-Frequency Trading (HFT): Used for low-latency systems seeking C++ speed without the associated memory safety risks.

- Digital Asset Platforms: Influence seen in languages like Move designed for asset safety. Exploration in traditional finance for core services.

These examples show Rust excelling where correctness, safety, and performance are paramount, justifying the upfront investment in its type system.

Getting Started with Type-Driven Development in Rust

Follow these steps to adopt a type-driven approach in Rust:

- Learn Rust Fundamentals: Master ownership, borrowing, lifetimes, and standard types like